How can we improve the quality of post-election survey data on electoral turnout? That is the core question of our recent paper. We present a novel way to question citizens about their voting behaviour that increases the truthfulness of responses. Our research finds that the inclusion of “face-saving” response items can drastically improve the accuracy of reported turnout.

Usually, the turnout reported in post-election surveys is much higher than actual turnout, and this is partly due to actual abstainers pretending that they have voted. Why do they lie? In many countries, voting is a social norm widely shared by the population. In established democracies voting is considered a duty, and is part and parcel of being a “good citizen”. Public discourse, educational programs, media campaigns and family discussions help disseminate this norm in the population. It is therefore not surprising that some abstainers prefer to lie about their non-voting behaviour when asked.

Yet the story does not end there. Previous research has shown that some people lie even when they complete an online questionnaire, a context where there is no interaction with an interviewer and no risk of being judged (Ansolabehere and Hersh 2012). Theory suggests that another psychological mechanism is at play here: the desire to preserve self-esteem. Some abstainers buy into the social norm of voting as a duty, and/or feel pressure from their friends and family to conform to it. These abstainers may lie to avoid the discomfort they would feel in admitting that they deviate from a norm they endorse, even when no one else can judge their response.

One way to reduce abstainers’ tendency to lie is to frame the turnout question so that they can signal that they adhere to the norm even though they did not vote. Abstainers who accept the social norm can then save face while still reporting their true voting behaviour.

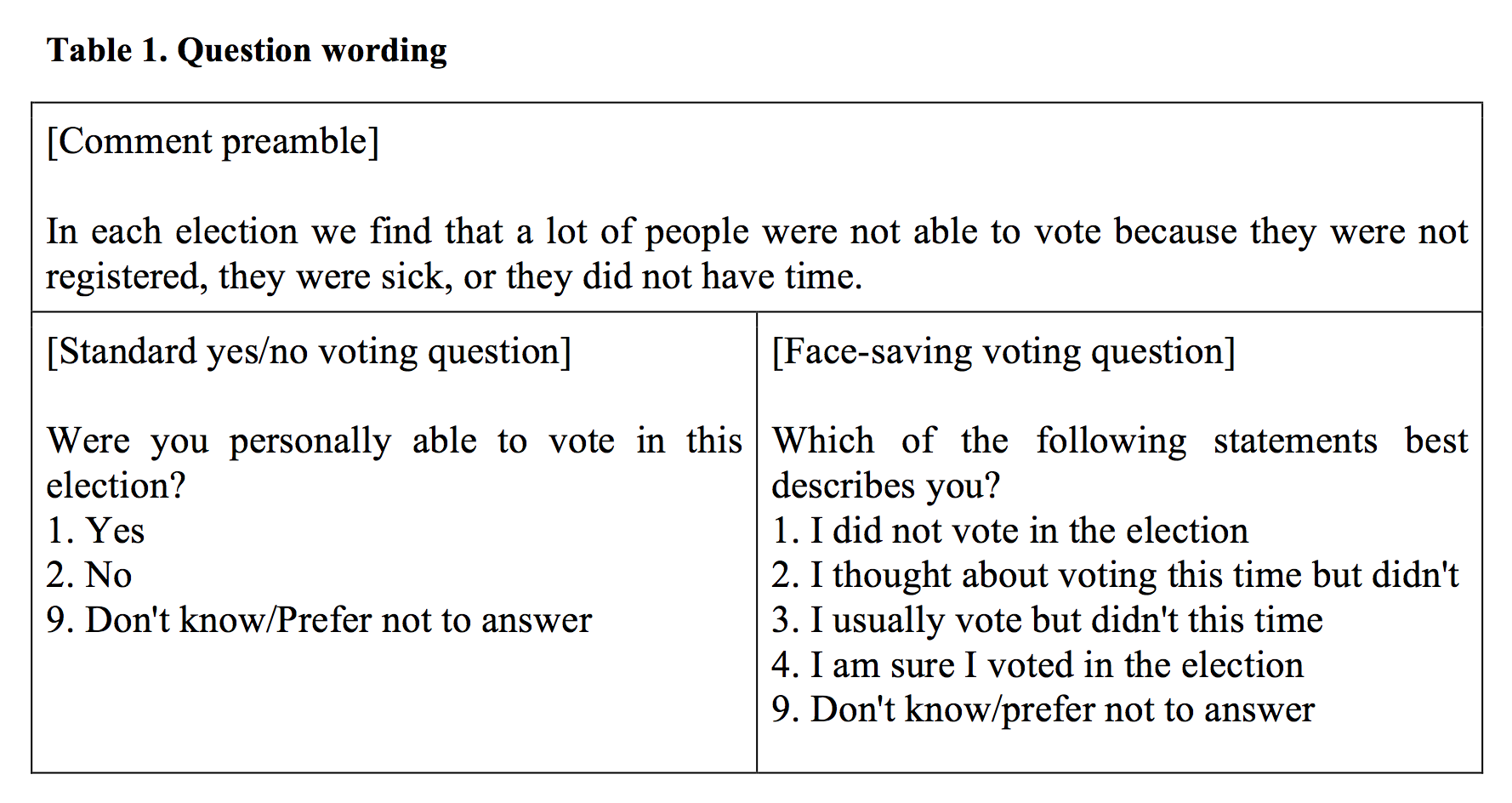

To test the efficacy of this framing, we ran survey experiments in which half of the respondents were randomly assigned to the classic “yes/no” turnout question, while the other half were presented with a new question. If the new question yields a reported turnout closer to actual turnout, we can infer that it reduces lying and thus produces more accurate survey answers. Various studies conducted in the United States have tested which question wording best reduces abstainers’ tendency to lie. Table 1 presents the “classic” turnout question and the “face-saving” turnout question, the alternative wording that has shown the most promising results so far.
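The assignment step and the comparison of reported turnout between the two groups can be sketched in Python. This is a minimal illustration under stated assumptions, not the code used in the study: the function names, the `"yes_no"`/`"face_saving"` labels and the assumption that each answer has already been coded as “voted” or “did not vote” are all hypothetical.

```python
import random

# Hypothetical labels for the two question versions (illustration only).
CLASSIC = "yes_no"
FACE_SAVING = "face_saving"


def assign_versions(respondent_ids, seed=None):
    """Randomly assign half of the respondents to each question version.

    When the number of respondents is odd, the face-saving group
    receives the extra respondent.
    """
    rng = random.Random(seed)
    ids = list(respondent_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {rid: (CLASSIC if i < half else FACE_SAVING) for i, rid in enumerate(ids)}


def reported_turnout_by_version(assignment, voted):
    """Percentage of respondents reporting that they voted, per version.

    `voted` maps respondent id -> True/False (answer already coded as
    voted / did not vote). Assumes both groups are non-empty.
    """
    totals = {CLASSIC: 0, FACE_SAVING: 0}
    voters = {CLASSIC: 0, FACE_SAVING: 0}
    for rid, version in assignment.items():
        totals[version] += 1
        voters[version] += int(voted[rid])
    return {v: 100 * voters[v] / totals[v] for v in totals}
```

For example, `assign_versions(range(10), seed=1)` puts five respondents in each group, and passing a fixed seed makes the split reproducible.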

Results from the United States suggest that including face-saving response items in surveys following national elections can reduce reported turnout by four to eight percentage points (e.g. from 84 per cent with the yes/no question to 76 per cent with the face-saving question; see Duff et al. 2007 and Belli, Moore and VanHoewyk 2006).

However, we do not know whether this new version of the turnout question is also effective in other countries, or whether face-saving response items can also reduce abstainers’ tendency to lie in elections at other levels of government, such as local, regional or European elections. Our research project addresses this gap with nineteen survey experiments embedded in post-election surveys in Canada, France, Spain, Switzerland and Germany. In particular, two surveys were conducted after municipal elections (in France), seven after regional elections (in Canada, Switzerland, Germany and Spain), six after national elections (in France, Spain and Switzerland), and five after the 2014 European election (in France, Spain and Germany).

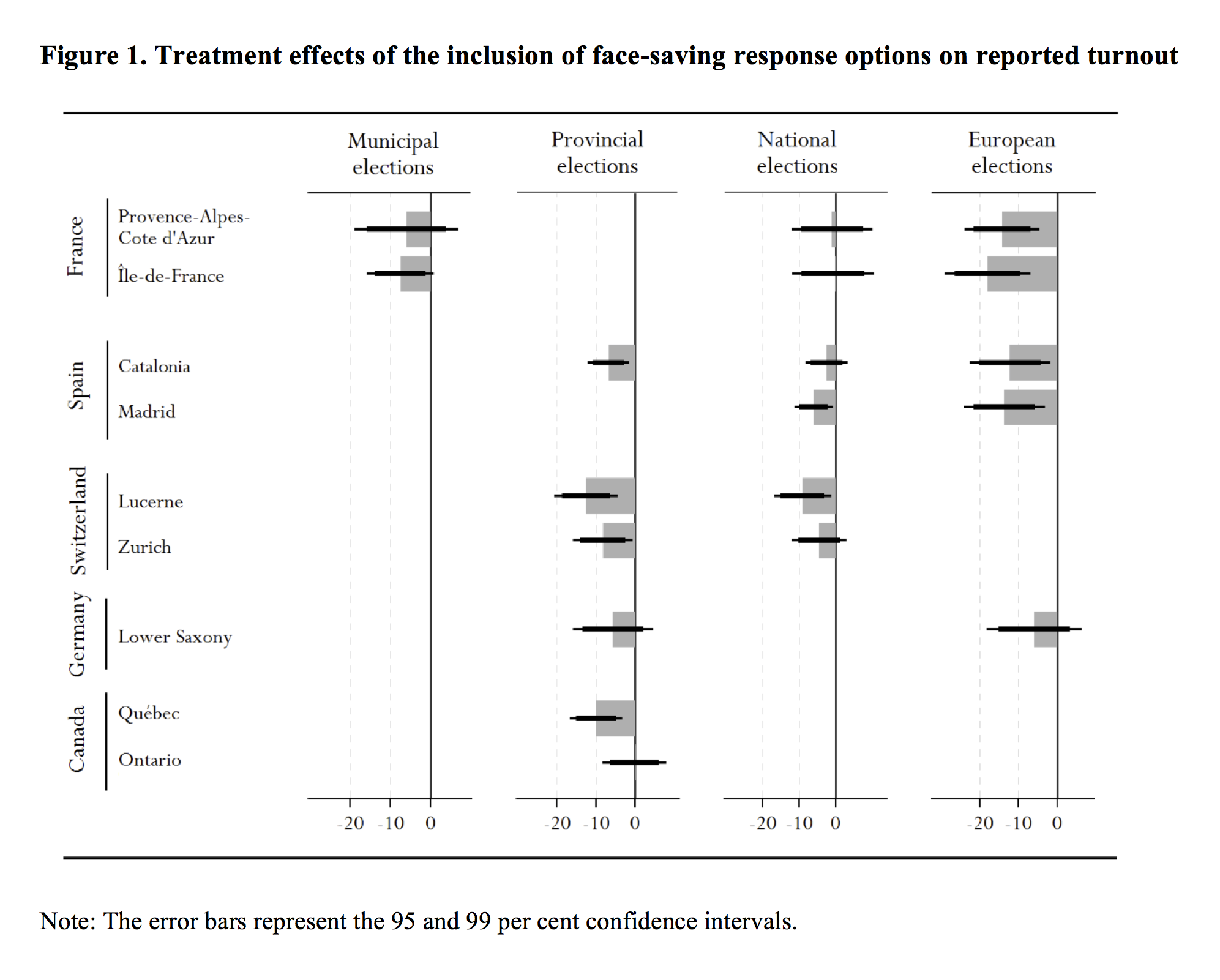

Figure 1 presents the results of each of these nineteen survey experiments. The top and left margins identify the country and the level of the election; the bars represent the difference, in percentage points, between the turnout measured in the group exposed to the yes/no question and that measured in the group exposed to the question that included face-saving response items.
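The quantity behind each bar, and one common way to judge whether it is statistically significant, can be sketched as follows. This is an assumption-laden illustration: the function name is hypothetical, the sign convention (face-saving minus yes/no, so a reduction is negative) is chosen to match the “expected negative direction” described in the results, and the pooled two-proportion z-test is a standard textbook test that is not necessarily the one used in the paper.

```python
import math


def face_saving_effect(voters_yes_no, n_yes_no, voters_face_saving, n_face_saving):
    """Effect of the face-saving wording on reported turnout.

    Returns (effect in percentage points, two-sided p-value), where
    effect = turnout(face-saving) - turnout(yes/no), so a negative value
    means the face-saving question lowered reported turnout.
    The p-value uses a pooled two-proportion z-test (normal approximation).
    """
    p_yn = voters_yes_no / n_yes_no
    p_fs = voters_face_saving / n_face_saving
    diff = p_fs - p_yn

    # Pooled share of reported voters under the null of no wording effect.
    pooled = (voters_yes_no + voters_face_saving) / (n_yes_no + n_face_saving)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_yes_no + 1 / n_face_saving))
    if se == 0:
        # Everyone (or no one) reported voting in both groups: no difference.
        return 100 * diff, 1.0

    z = diff / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return 100 * diff, p_value
```

With the US figures of 84 and 76 per cent and a hypothetical 500 respondents per group, `face_saving_effect(420, 500, 380, 500)` gives an effect of about -8 percentage points with a p-value well below 0.05.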

In eleven of the nineteen surveys, the inclusion of face-saving response items significantly reduced reported turnout. In four others, the effect goes in the expected negative direction but does not reach conventional levels of statistical significance. In the remaining four, the new question produced a turnout level virtually identical to that produced by the traditional yes/no question. When we combine the nineteen datasets into a single large dataset and analyse it as one large experiment, we find that the face-saving question reduces reported turnout by 7.59 percentage points (p<0.001, N=15,185).
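A simple reading of “combining the datasets” is to stack every respondent from every survey and compare reported turnout across the two question versions, keeping the per-survey effects alongside to show their spread. The sketch below does exactly that and nothing more: the `SurveyArms` structure, the function names and any counts passed in are hypothetical, and the paper’s actual pooled model may differ (for instance by accounting for which survey each respondent came from).

```python
from dataclasses import dataclass


@dataclass
class SurveyArms:
    """Hypothetical per-survey counts for the two question versions."""
    name: str
    voters_yes_no: int
    n_yes_no: int
    voters_face_saving: int
    n_face_saving: int


def _effect_pp(v_yn, n_yn, v_fs, n_fs):
    """Reported turnout (face-saving) minus (yes/no), in percentage points."""
    return 100 * (v_fs / n_fs - v_yn / n_yn)


def per_survey_effects(surveys):
    """Effect of the face-saving wording in each survey separately."""
    return {
        s.name: _effect_pp(s.voters_yes_no, s.n_yes_no, s.voters_face_saving, s.n_face_saving)
        for s in surveys
    }


def pooled_effect(surveys):
    """Stack all surveys into one dataset; return (effect in pp, total N)."""
    v_yn = sum(s.voters_yes_no for s in surveys)
    n_yn = sum(s.n_yes_no for s in surveys)
    v_fs = sum(s.voters_face_saving for s in surveys)
    n_fs = sum(s.n_face_saving for s in surveys)
    return _effect_pp(v_yn, n_yn, v_fs, n_fs), n_yn + n_fs
```

Because each survey splits its sample roughly in half between the two versions, the stacked difference stays close to a size-weighted average of the per-survey effects; with unbalanced splits, differences in baseline turnout between surveys could distort this naive stacked estimate, which is one reason to account for the survey in a fuller model.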

That being said, our results show considerable variation in effect sizes from survey to survey (see Figure 1), and in our paper we further explore the potential sources of this variation. Nevertheless, our main finding is that, in the majority of our nineteen survey experiments, the face-saving question reduced turnout over-reporting compared with the classic yes/no question. Admittedly, in a few experiments, the two versions of the turnout question produced similar levels of turnout. However, we find no indication that using the face-saving question could have the unwanted effect of increasing reported turnout rather than reducing it. Thus, at best, the new question helps collect more honest answers; at worst, it has no effect. In light of our results, “we recommend that electoral studies outside the United States offer respondents face-saving options when asking them to report their voting behaviour in post-election surveys.”

References:

Ansolabehere, Stephen, and Eitan Hersh. 2012. “Validation: What big data reveal about survey misreporting and the real electorate.” Political Analysis 20(4):437–59. http://dx.doi.org/10.1093/pan/mps023

Belli, Robert F., Sean E. Moore, and John VanHoewyk. 2006. “An experimental comparison of question forms used to reduce vote overreporting.” Electoral Studies 25(4):751–59. http://dx.doi.org/10.1016/j.electstud.2006.01.001

Duff, Brian, Michael J. Hanmer, Won-Ho Park, and Ismail K. White. 2007. “Good excuses: Understanding who votes with an improved turnout question.” Public Opinion Quarterly 71(1):67–90. http://dx.doi.org/10.1093/poq/nfl045

Morin-Chassé, Alexandre, Damien Bol, Laura Stephenson, and Simon Labbé St-Vincent. Forthcoming. “How to survey about electoral turnout? The efficacy of the face-saving response items in nineteen different contexts.” Political Science Research and Methods (accessible online ahead of print). http://dx.doi.org/10.1017/psrm.2016.31